API Rate Limiting Strategies

Kalana Ravishanka

06 March, 2024

7 mins read

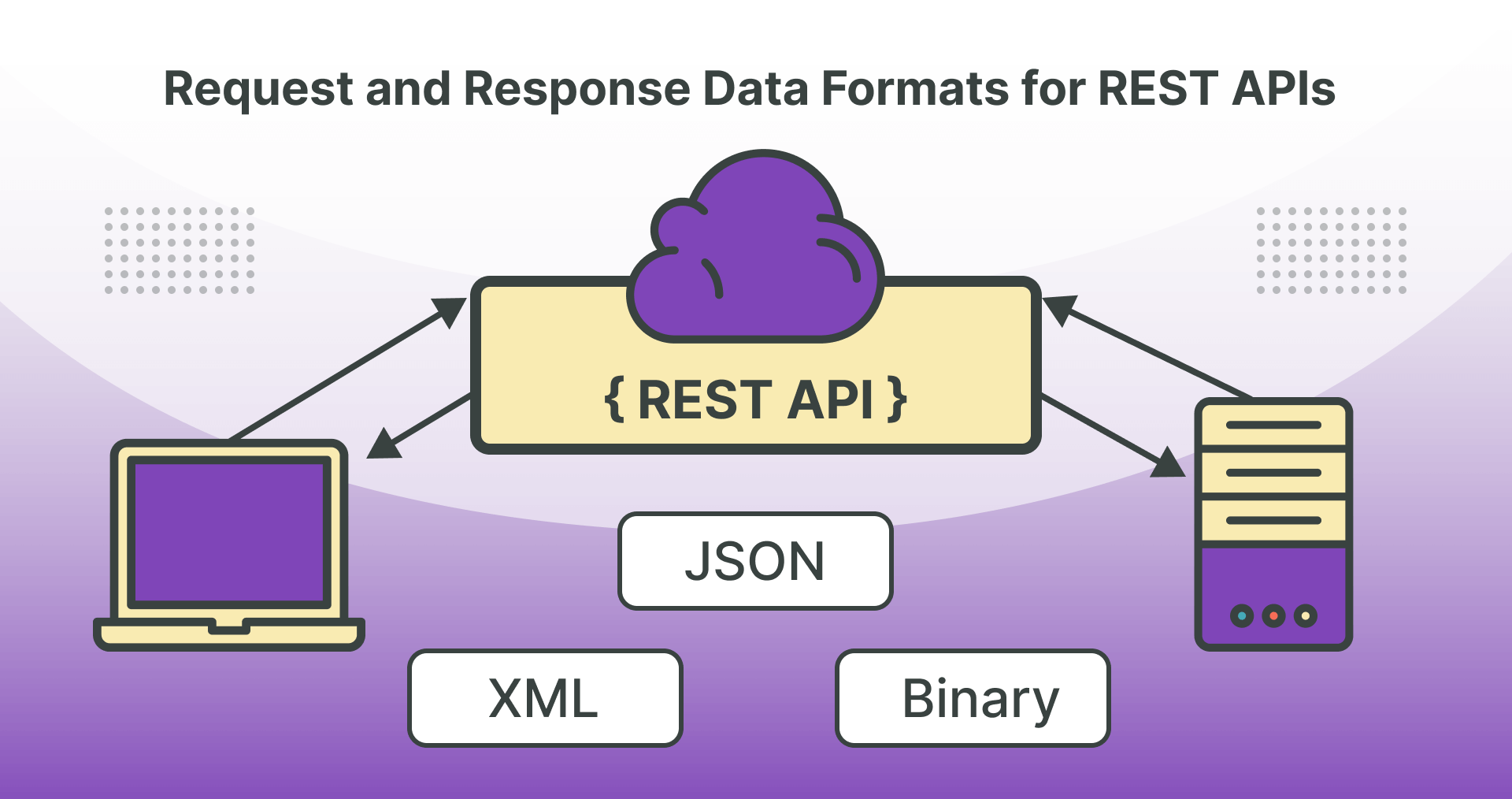

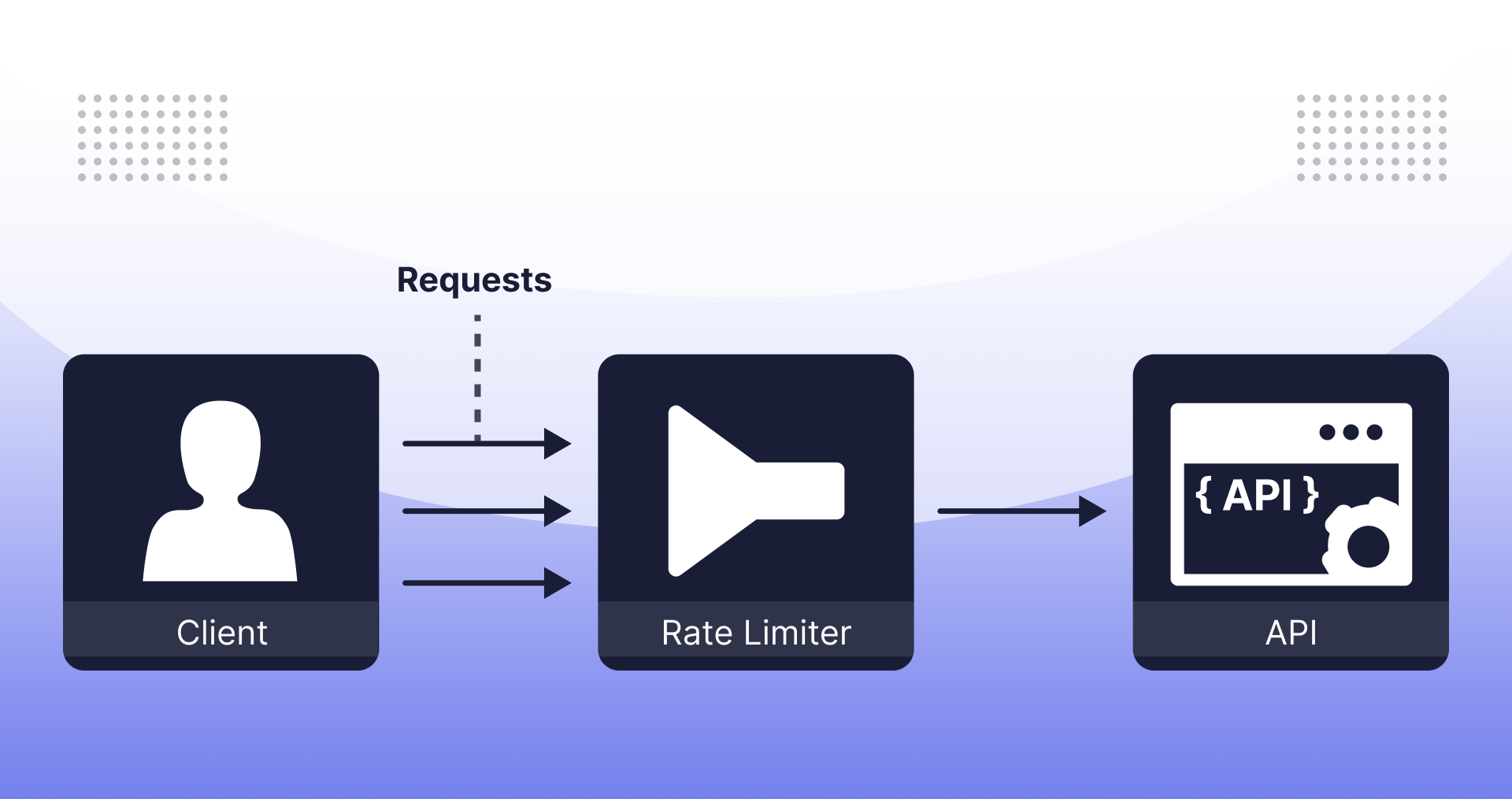

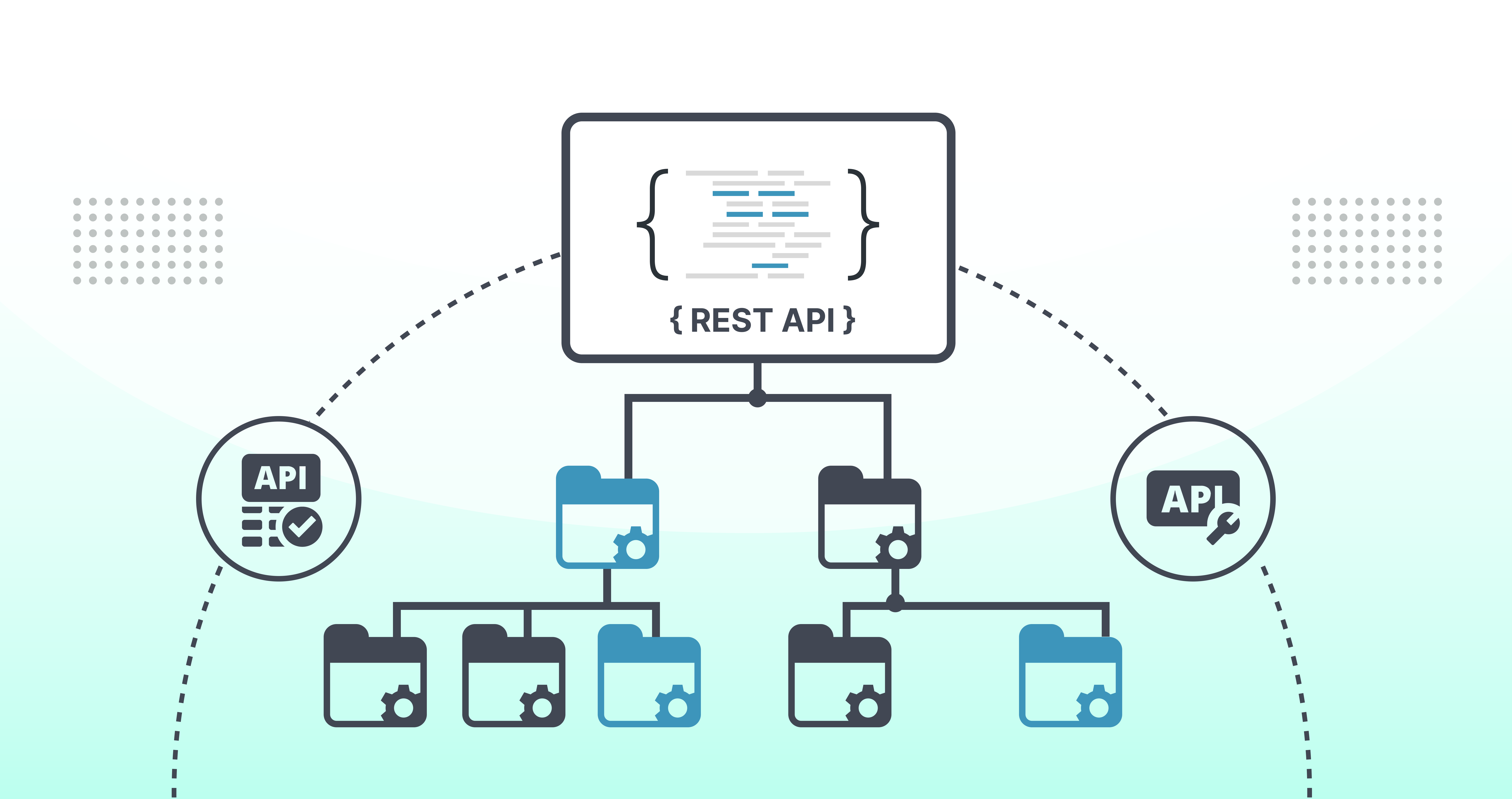

Rate limiting is an indispensable tool in web development and API design. It helps combat abuse, keeps resource use balanced across users, and keeps services within established limits while preserving stability. As the number of users requesting web services and APIs grows, proper rate limiting strategies are necessary to protect server resources while providing a consistent, high-quality user experience.

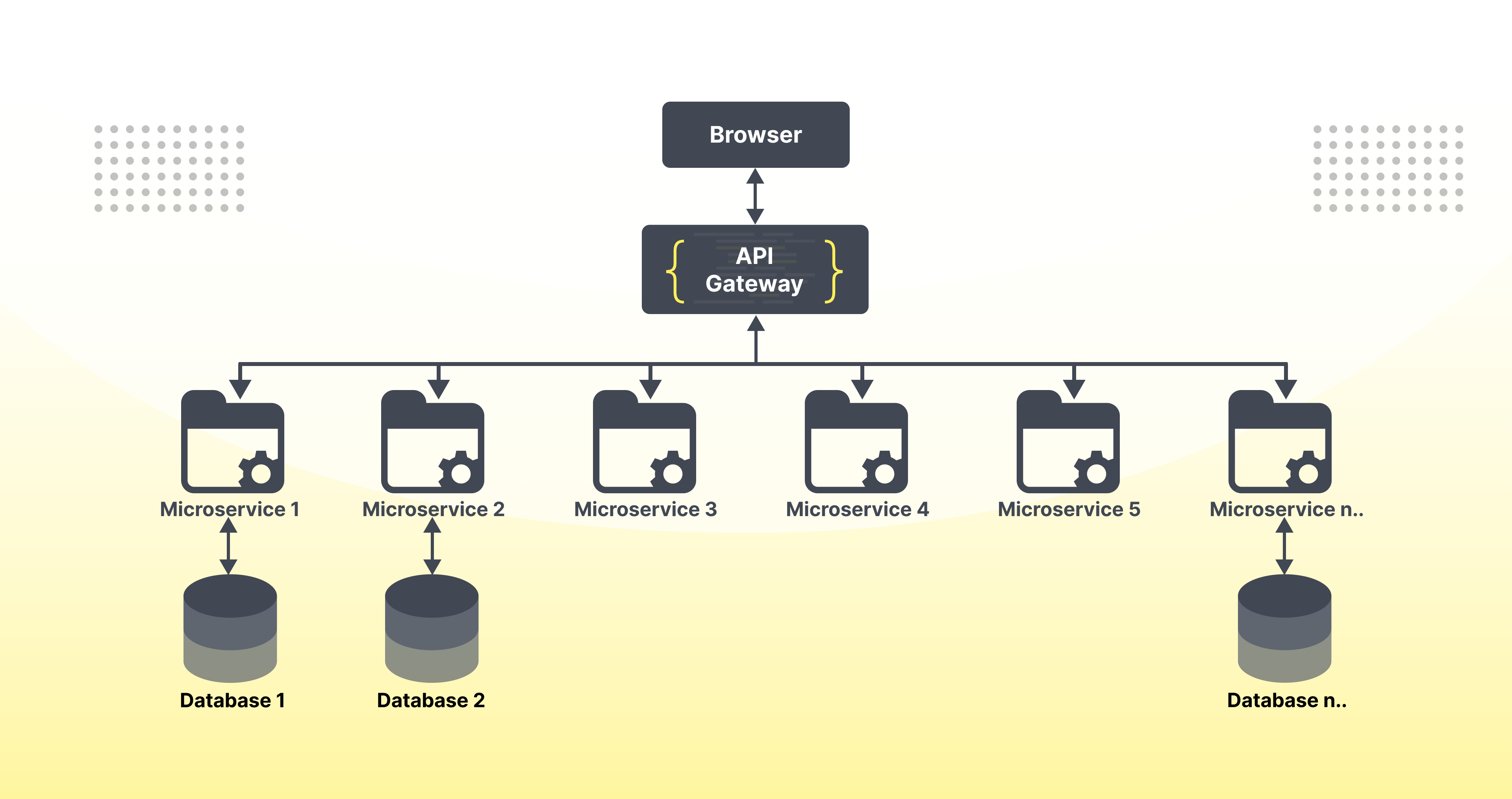

Rate limiting regulates the requests a client sends to an API so that they stay within defined limits. By capping the frequency or volume of requests, API providers reduce the risk of server overload and abusive use of data, and can manage traffic surges efficiently. Beyond controlling traffic, rate limiting also promotes fair use of an API by giving different clients equal access.

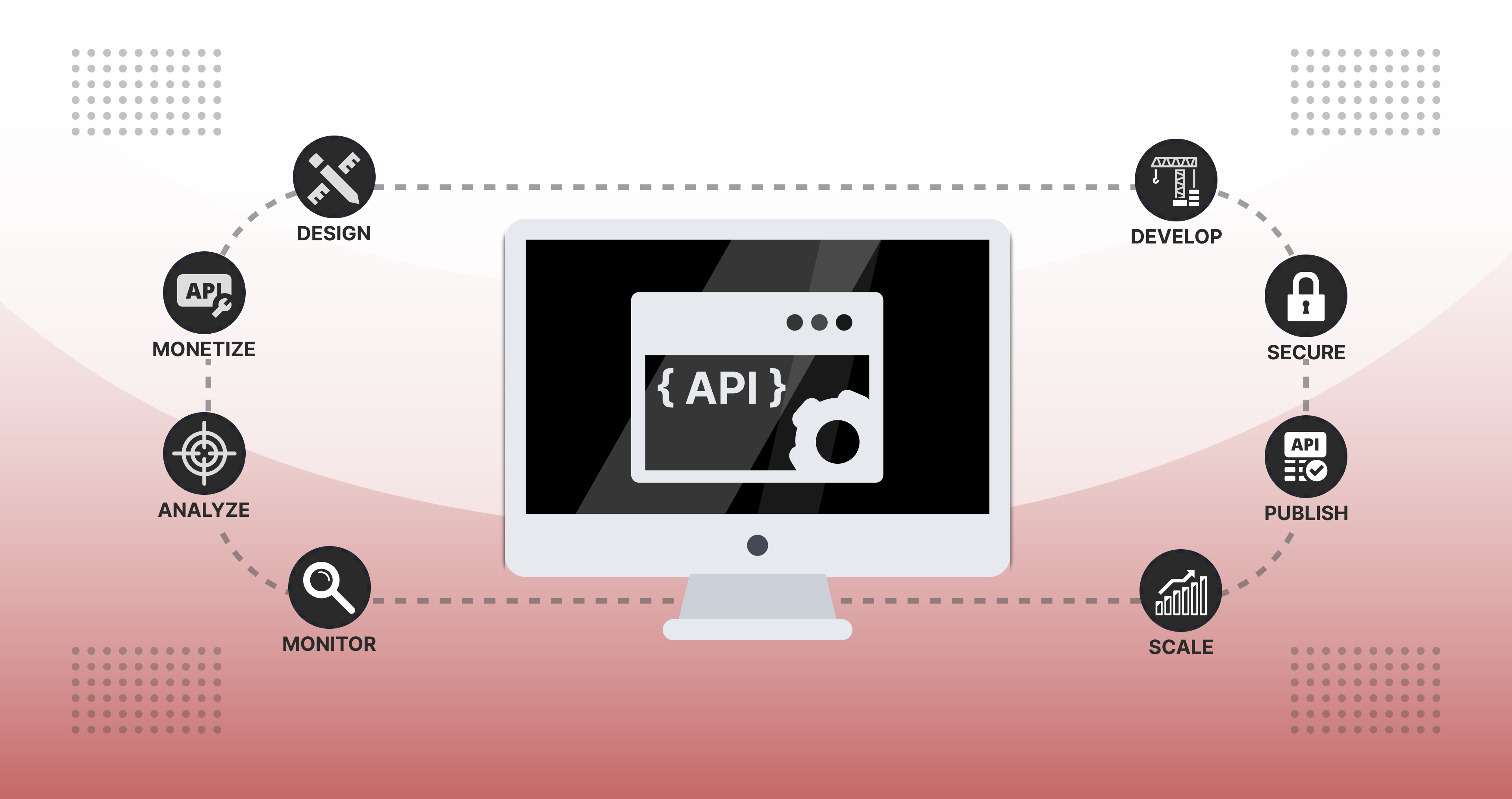

Implementing rate limiting in REST APIs requires careful analysis of factors such as the type of API endpoints, the needs of client applications, and usage patterns. Developers can choose from a variety of strategies and techniques to impose rate limits without hindering legitimate use. This article looks at some popular rate limiting strategies for REST APIs and the pros and cons of each.

-

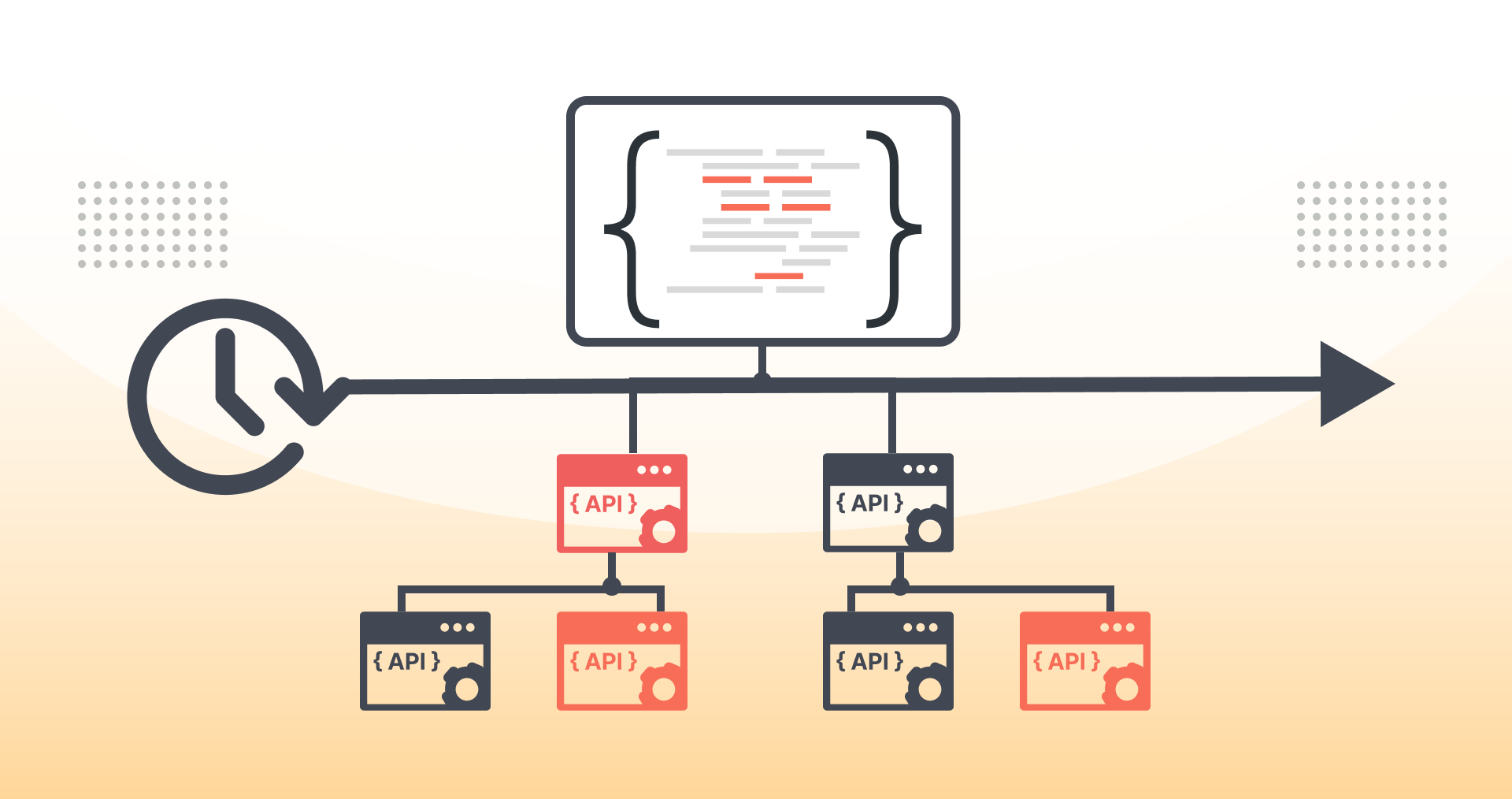

Token Bucket Algorithm:

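The token bucket algorithm is one of the most widely used methods for rate limiting in REST APIs. Each client is assigned a bucket that holds a fixed maximum number of tokens, and tokens are added back at a steady rate. Every request consumes a token; when the bucket is empty, requests are rejected or delayed until new tokens arrive. Because a full bucket can be spent at once, the algorithm permits short bursts while still enforcing an average request rate over time.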
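To make the token bucket idea concrete, here is a minimal single-process sketch. The class name `TokenBucket`, its parameters (`capacity`, `refill_rate`), and the injectable `clock` are assumptions chosen for illustration, not part of any particular library.

```python
import time


class TokenBucket:
    """Holds up to `capacity` tokens, refilled at `refill_rate` tokens per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock                 # injectable so the bucket can be tested
        self.tokens = float(capacity)      # start full, so an initial burst is allowed
        self.last_refill = clock()

    def _refill(self):
        # Add tokens for the time elapsed since the last call, capped at capacity.
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self, cost=1):
        """Return True and spend `cost` tokens if enough are available."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

In practice an API would keep one bucket per client (for example, per API key) and call `allow()` before handling each request. The `capacity` controls how large a burst is tolerated, while `refill_rate` sets the sustained request rate.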

- Sliding Window Algorithm:

The sliding window algorithm is another powerful method for rate limiting in REST APIs. This approach maintains a sliding time window that tracks the number of requests made by a client within a given duration. By dividing the time window into smaller intervals, such as seconds or minutes, API providers can monitor request rates with fine granularity. When a client exceeds the allowed request rate within the time window, subsequent requests may be rejected or delayed. The sliding window algorithm gives precise control over request rates and can adapt quickly to changing traffic patterns, making it suitable for dynamic and unpredictable workloads.
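The sliding window described above can be sketched as a set of per-client counters, one per small time slot, where slots older than the window are discarded. The names `SlidingWindowCounter`, `slot_seconds`, and `client_id` are illustrative assumptions, and this version keeps its state in process memory only.

```python
import time
from collections import defaultdict


class SlidingWindowCounter:
    """Counts requests in small slots and sums the slots inside the window."""

    def __init__(self, limit, window_seconds, slot_seconds=1.0, clock=time.monotonic):
        self.limit = limit
        self.slot_seconds = slot_seconds
        self.num_slots = max(1, round(window_seconds / slot_seconds))
        self.clock = clock
        self.slots = defaultdict(dict)     # client_id -> {slot_index: request_count}

    def allow(self, client_id):
        current = int(self.clock() // self.slot_seconds)
        oldest = current - self.num_slots + 1
        counts = self.slots[client_id]

        # Drop slots that have slid out of the window.
        for index in [i for i in counts if i < oldest]:
            del counts[index]

        # Reject if the window is already full; rejected requests are not counted.
        if sum(counts.values()) >= self.limit:
            return False

        counts[current] = counts.get(current, 0) + 1
        return True
```

Smaller slots track the window more precisely at the cost of more counters per client. A variant that stores one timestamp per request is more exact still, but uses memory proportional to the request rate.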

-

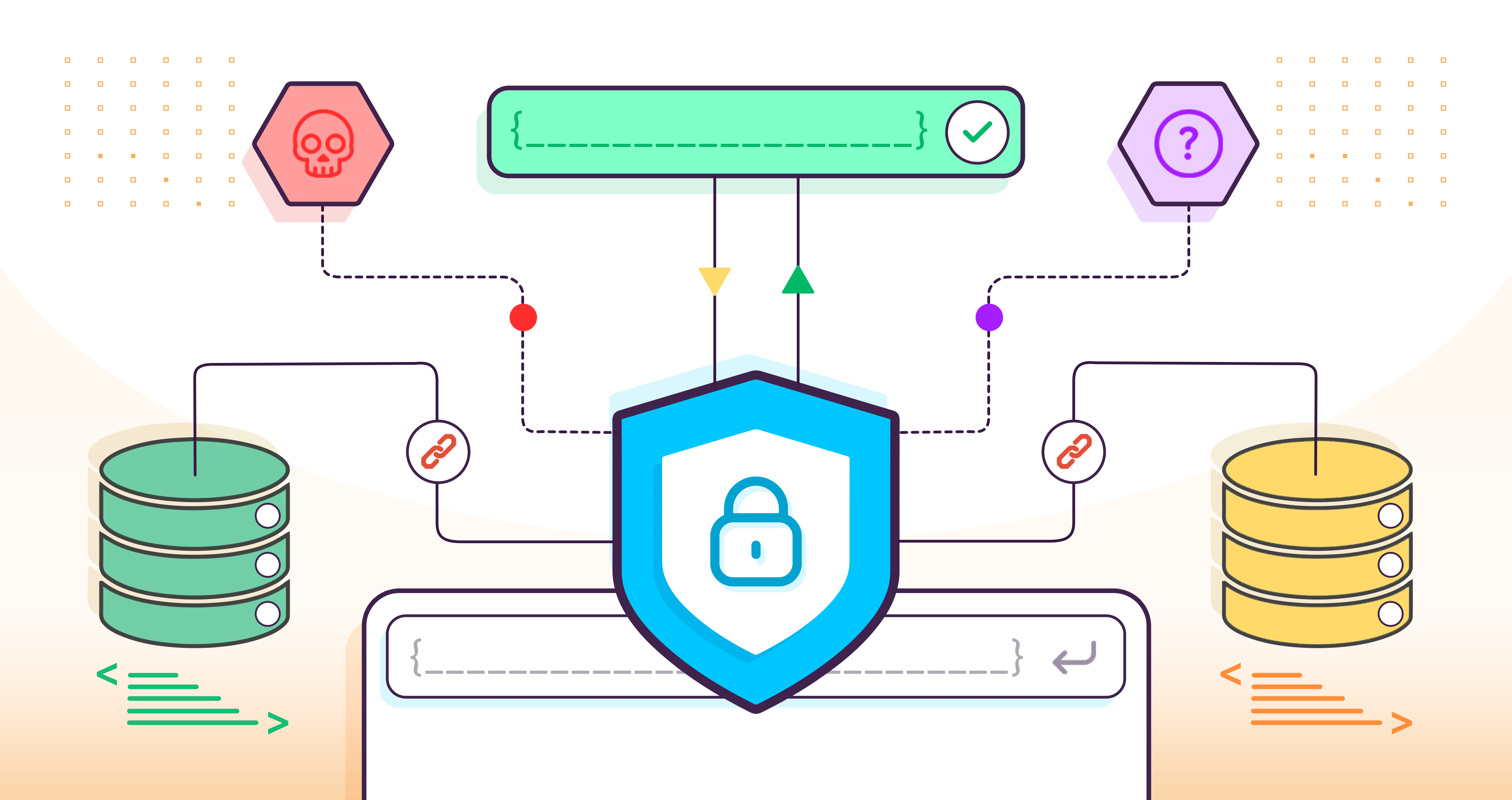

Distributed Rate Limiting:

In scenarios where API endpoints are deployed across multiple servers or data centers, distributed rate limiting becomes essential. Distributed rate limiting allows API providers to enforce consistent rate limits across all instances of the API, regardless of which server handles the request. This can be achieved through centralized rate limiting services, shared data stores, or inter-server communication mechanisms. By synchronizing rate limit data and request counts across the distributed infrastructure, API providers can maintain uniform rate limiting policies and prevent inconsistencies or loopholes that could arise from server-specific rate limits.
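The shared data store approach can be illustrated with a small sketch in which several API instances increment the same counter. Everything here is an assumption made for illustration: `InMemoryStore` is only a stand-in for a real networked store with an atomic increment operation, `ApiInstance` is a hypothetical wrapper, and a simple fixed-window counter is used to keep the example short.

```python
import threading
import time


class InMemoryStore:
    """Stand-in for a shared store that offers an atomic increment."""

    def __init__(self):
        self._counts = {}
        self._lock = threading.Lock()

    def incr(self, key):
        # A real shared store would also expire old keys; this stand-in does not.
        with self._lock:
            self._counts[key] = self._counts.get(key, 0) + 1
            return self._counts[key]


class ApiInstance:
    """One server instance; all instances consult the same store."""

    def __init__(self, store, limit, window_seconds, clock=time.time):
        self.store = store
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock                 # wall-clock time, so instances agree on windows

    def allow(self, client_id):
        window_index = int(self.clock() // self.window_seconds)
        key = f"{client_id}:{window_index}"
        # The increment happens in the shared store, so the count is global.
        return self.store.incr(key) <= self.limit
```

Because the counter lives in the store rather than in each server, a client cannot get extra quota by spreading requests across instances. The trade-off is an extra round trip to the store on every request, and the instances need reasonably synchronized clocks to agree on window boundaries.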

- Dynamic Rate Limiting Rules:

Dynamic rate limiting rules allow API providers to adjust rate limits based on real-time conditions, user behavior, and system health. By leveraging telemetry data, such as request latency, error rates, and server load, API providers can dynamically adapt rate limits to accommodate fluctuating traffic patterns and mitigate anomalous behavior. For example, during periods of heavy load or service degradation, rate limits can be temporarily tightened to protect server resources and preserve service quality. Dynamic rate limiting rules can also be used to apply different rate limits to distinct client classes, such as free and premium users, based on their subscription level or usage history.
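As a rough illustration of dynamic rules, the sketch below derives an effective limit from a client's tier and a snapshot of telemetry. The tier limits, thresholds, and scaling factors are invented for the example, as are the names `Telemetry`, `BASE_LIMITS`, and `effective_limit`; real values would come from measurement and business requirements.

```python
from dataclasses import dataclass

# Hypothetical requests-per-minute limits for each subscription tier.
BASE_LIMITS = {"free": 60, "premium": 600}


@dataclass
class Telemetry:
    cpu_load: float          # fraction of capacity in use, 0.0 to 1.0
    error_rate: float        # fraction of failed requests, 0.0 to 1.0
    p95_latency_ms: float    # 95th percentile request latency


def effective_limit(tier, telemetry):
    """Scale the tier's base limit down when the system looks unhealthy."""
    factor = 1.0
    if telemetry.cpu_load > 0.85:
        factor = min(factor, 0.5)      # heavy load: halve the limit
    if telemetry.error_rate > 0.05:
        factor = min(factor, 0.5)      # elevated errors: halve the limit
    if telemetry.p95_latency_ms > 1000:
        factor = min(factor, 0.75)     # slow responses: trim the limit
    return max(1, int(BASE_LIMITS[tier] * factor))
```

The computed limit can then be fed into whichever limiter enforces it. Taking the smallest factor, rather than multiplying them, keeps one unhealthy signal from compounding with another and shrinking the limit further than intended.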

- Throttling and Feedback Mechanisms:

Throttling mechanisms can complement rate limiting strategies by giving clients feedback about their request status and rate limits. When a client exceeds the allowed request rate, the API can return specific HTTP status codes or error messages to indicate the reason for rejection or delay. Additionally, API responses may include headers or metadata that convey information about remaining quota, reset intervals, and rate limit usage. By incorporating clear and informative feedback into their APIs, providers can help clients understand and adhere to rate limits, troubleshoot issues, and adjust their behavior accordingly.
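The feedback described above might look like the following framework-independent sketch. The `429 Too Many Requests` status code and the `Retry-After` header are standard HTTP; the `X-RateLimit-*` header names are a widely used convention rather than a standard, and are an assumption here. The function name `build_response` and the response body shape are also illustrative.

```python
import math


def build_response(allowed, limit, remaining, reset_in_seconds):
    """Return (status_code, headers, body) with rate limit feedback attached."""
    reset = math.ceil(reset_in_seconds)    # round up so clients never retry early
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, remaining)),
        "X-RateLimit-Reset": str(reset),
    }
    if allowed:
        return 200, headers, {"status": "ok"}

    # Rejected: tell the client why, and how long to wait before retrying.
    headers["Retry-After"] = str(reset)
    body = {
        "error": "rate_limit_exceeded",
        "message": f"Request limit of {limit} reached. Retry in {reset} seconds.",
    }
    return 429, headers, body
```

Sending the quota headers on successful responses as well lets well-behaved clients slow down before they hit the limit, instead of discovering it through a rejected request.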

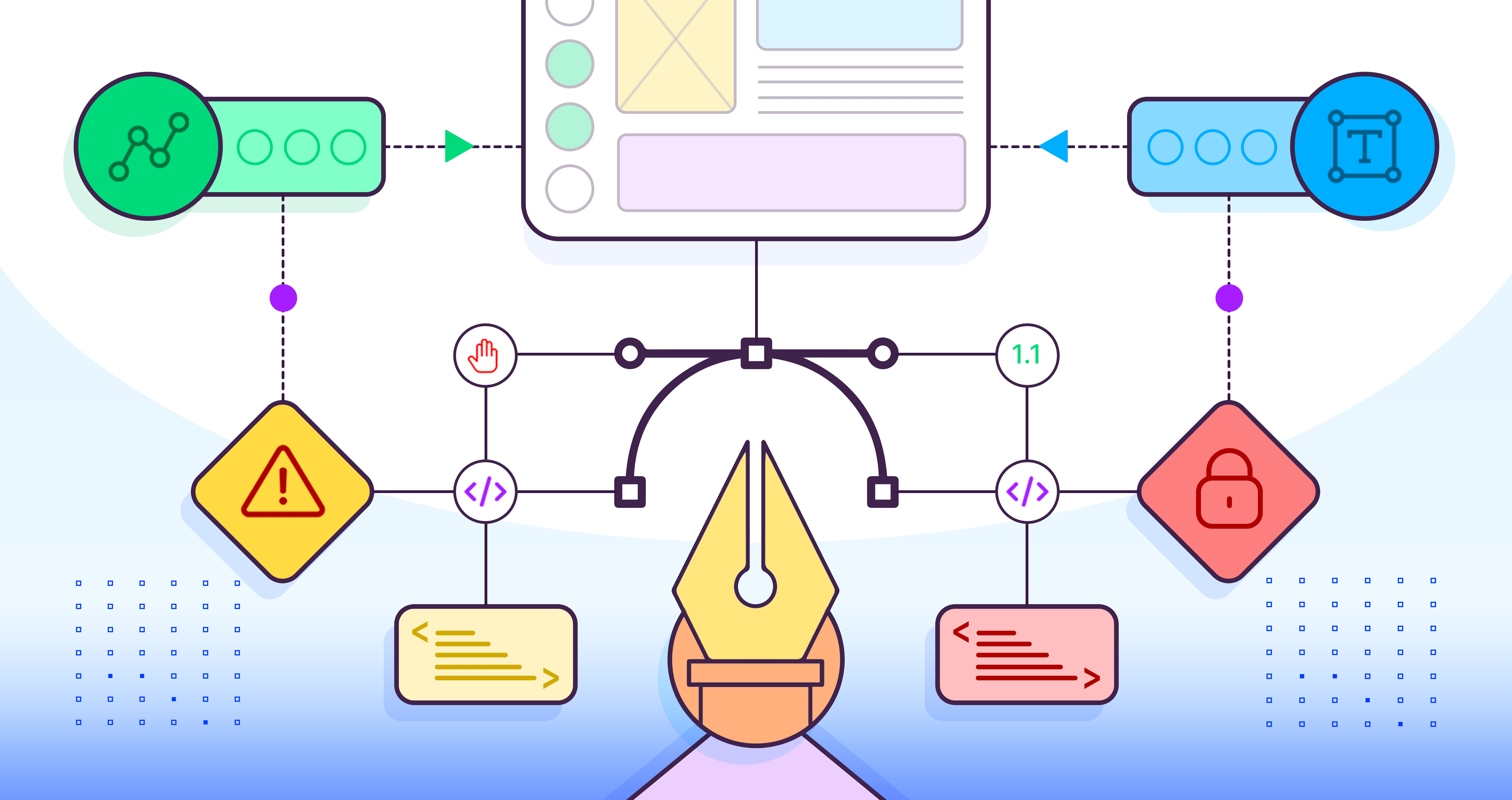

Best Practices for Implementation:

- Clear Documentation:

Communicate rate limits clearly through API documentation to help developers understand the restrictions and plan their integrations accordingly.

- Graceful Handling of Rate-Limited Requests:

Implement user-friendly error messages and HTTP status codes to inform clients about rate limiting restrictions, enabling them to adjust their behavior accordingly.

- Token Expiry and Refresh Mechanisms:

Consider incorporating token expiry and refresh mechanisms to prevent long-term abuse and ensure that clients re-authenticate after a certain period.

- Monitoring and Analytics:

Regularly monitor API usage patterns and leverage analytics tools to detect potential abuse or unusual traffic spikes, allowing proactive adjustments to rate limits.

In conclusion, implementing effective rate limiting strategies is paramount for maintaining the reliability, security, and performance of REST APIs. By leveraging techniques such as the token bucket algorithm, the sliding window algorithm, distributed rate limiting, dynamic rate limiting rules, and throttling mechanisms, API providers can establish robust rate limiting policies that balance the needs of clients with the constraints of server resources. Furthermore, careful consideration of client expectations, usage patterns, and business requirements is crucial for designing rate limiting strategies that promote fair and equitable access to API resources. By adopting best practices and staying attentive to evolving traffic patterns, API providers can ensure that their rate limiting mechanisms enable sustainable and efficient API usage while safeguarding against misuse and abuse.

Kalana Ravishanka

Software Engineer at X-venture
